How to move a single partition CosmosDB collection

You might start by asking why. Azure CosmosDB is all about scale, and partitions are one of the keystones for achieving it. True as that is, and as much as it is the future, CosmosDB didn't start out requiring partitions, and there are deployments still running on a single partition. These deployments should be upgraded to use partitions. In my case, I had a client with a three-year-old setup that needed to be moved to a new Azure subscription, which meant moving a CosmosDB still using a single partition. As the move should not involve any code changes, here is my way of moving a single-partition CosmosDB, as this is still possible.

Using Azure portal, a No-go solution

A first option is to create a new CosmosDB using the Azure Portal. As of today you are required to add a partition key, making this option a no-go.

Using Azure REST API, an option

A second approach is the REST API for CosmosDB. If you need single-partition support, the API version must be earlier than 2018-12-31, meaning 2018-09-17 or earlier. When I tested this approach, the database had already been created using the portal. This caused issues even though the correct API version was specified. The reason is that the database must also be created through the REST API with the correct version, not from the portal. Using the REST API also requires you to generate an authorization signature.
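To give an idea of what generating that authorization signature involves, here is a minimal Python sketch of the master-key scheme as I understand it from the REST API documentation: an HMAC-SHA256 over the lowercased verb, resource type, resource link, and date, then base64-encoded and URL-encoded. The function name `cosmos_auth_token`, the placeholder key, and the resource link used for creating a collection are my assumptions for illustration; treat it as a starting point, not a verified client.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate
from urllib.parse import quote


def cosmos_auth_token(verb, resource_type, resource_link, date, master_key):
    """Build an Authorization header value for a master-key request (sketch)."""
    # The master key is base64 text; the HMAC needs the raw key bytes.
    key = base64.b64decode(master_key)
    # Verb, resource type and date are lowercased; the resource link keeps its case.
    payload = (
        f"{verb.lower()}\n{resource_type.lower()}\n"
        f"{resource_link}\n{date.lower()}\n\n"
    )
    digest = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    # The complete token is URL-encoded before it is placed in the header.
    return quote(f"type=master&ver=1.0&sig={signature}", safe="")


if __name__ == "__main__":
    # Placeholder key for illustration only, NOT a real account key.
    fake_key = base64.b64encode(b"not-a-real-key").decode("ascii")
    date = formatdate(usegmt=True)  # RFC 1123 date in GMT
    headers = {
        # Assumption: creating a collection signs the parent database link.
        "Authorization": cosmos_auth_token(
            "POST", "colls", "dbs/goldminedb", date, fake_key
        ),
        "x-ms-date": date,  # must be the same date that was signed
        "x-ms-version": "2018-09-17",  # last version before partition keys were required
    }
    print(headers)
```

The same `x-ms-version` header has to be sent when creating the database itself, which is the detail that tripped me up above.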

Using Azure CLI

The third option, and the one I ended up using, is the Azure CLI, as recommended by Mark Brown. The Azure CLI is pretty straightforward to use, especially compared to the REST API.

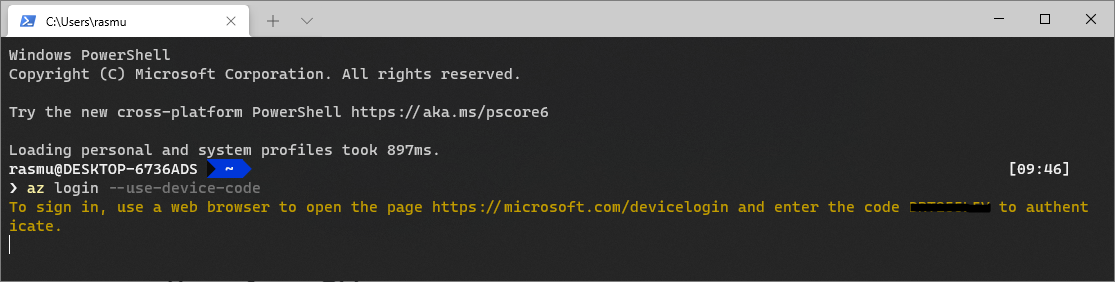

If you have multiple Azure accounts, be aware of the device code login option. It makes signing in much easier than logging in through the default web browser.

login

az login --use-device-code

Using Windows Terminal, this command results in a device code. Paste this device code into the device login page that the command output points to.

Heads-up: deprecated Cosmos API

When you execute the commands below, the response tells you that the command executed but is deprecated. Do not switch to the newer commands, as they do NOT support single-partition collections, which is the gold we're aiming for.

Create Cosmos DB account

First we create a CosmosDB account specifying its name and the resource group to create it within.

az cosmosdb create --name cosmos-goldmine-prod --resource-group rg-goldmine-prod

Create CosmosDB database

With the new account we can now create the database.

az cosmosdb database create --name cosmos-goldmine-prod --resource-group rg-goldmine-prod --db-name goldminedb

Create Single partition collection

Finally, we're ready to create the collection (the newer CLI for CosmosDB uses the term container instead of collection).

az cosmosdb collection create --collection-name goldrates --name cosmos-goldmine-prod --db-name goldminedb --resource-group-name rg-goldmine-prod --throughput 1000

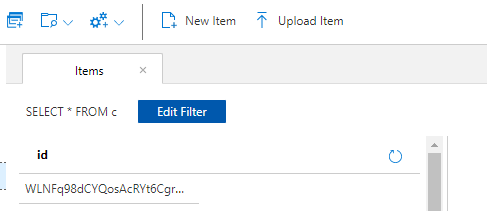

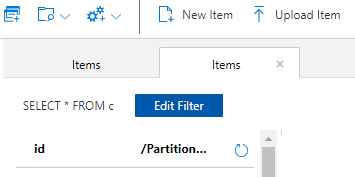

As no partition key is specified, meaning we opt out of creating one, the new collection is created as expected. If you navigate to the CosmosDB inside the Azure portal, you'll also see that no partition key is displayed. Of course, this does not prevent you from having a mix of partitioned and non-partitioned collections inside the same CosmosDB database. Here is another collection from the same database using a partition key.
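If you need to repeat the three CLI steps from this section for more than one environment, they can be collected in a small script. The following is a minimal Python sketch that only wraps the exact `az` commands shown in this post; the function names `build_commands` and `run` and the dry-run default are my own additions for illustration, and it assumes an Azure CLI version that still ships the deprecated `database` and `collection` commands.

```python
import shlex
import shutil
import subprocess


def build_commands(account, resource_group, database, collection, throughput=1000):
    """Return the three az invocations from this post as argument lists."""
    return [
        ["az", "cosmosdb", "create",
         "--name", account, "--resource-group", resource_group],
        ["az", "cosmosdb", "database", "create",
         "--name", account, "--resource-group", resource_group,
         "--db-name", database],
        # No partition key argument is passed: that is what keeps the
        # collection single-partition.
        ["az", "cosmosdb", "collection", "create",
         "--collection-name", collection, "--name", account,
         "--db-name", database, "--resource-group-name", resource_group,
         "--throughput", str(throughput)],
    ]


def run(commands, dry_run=True):
    """Print each command and, unless dry_run is set, execute it."""
    for cmd in commands:
        print(shlex.join(cmd))
        if dry_run:
            continue
        # Resolve the executable first (on Windows this finds az.cmd).
        az_path = shutil.which(cmd[0])
        if az_path is None:
            raise FileNotFoundError("Azure CLI (az) not found on PATH")
        subprocess.run([az_path, *cmd[1:]], check=True)


if __name__ == "__main__":
    commands = build_commands(
        "cosmos-goldmine-prod", "rg-goldmine-prod", "goldminedb", "goldrates"
    )
    run(commands)  # dry run: only prints; pass dry_run=False to execute
```

Keeping the dry run as the default makes it easy to review the commands before anything is created in the new subscription.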

Move data

With a fresh database, it's time to move the data. One option is to use Azure Data Factory. It's an Azure-hosted solution and requires some heavy lifting to set up connections. I tried this option but decided not to continue, as the "missing" partition key became an issue.

A complete list of options for moving data with CosmosDB can be found here.

I decided to use the Azure Data Migration tool as an offline solution. This tool supports JSON files, and the partition key is optional.

When specifying the data to import, it is important to ensure that the number of parallel requests and the collection throughput are set. I increased the throughput a lot for the import. If you have document IDs that you reference elsewhere, it's also important to disable ID generation during the import.
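Since disabling ID generation only works if every exported document already carries its own `id`, a quick check of the export before importing can save a failed run. This is a minimal Python sketch under the assumption that the export is a single JSON file containing one array of documents; the function names and the file layout are hypothetical and not part of the migration tool.

```python
import json
from collections import Counter


def check_ids(documents):
    """Return (indexes of docs without a usable id, ids used more than once)."""
    def usable_id(doc):
        value = doc.get("id")
        return isinstance(value, str) and value != ""

    missing = [index for index, doc in enumerate(documents) if not usable_id(doc)]
    counts = Counter(doc["id"] for doc in documents if usable_id(doc))
    duplicates = sorted(doc_id for doc_id, n in counts.items() if n > 1)
    return missing, duplicates


def check_export_file(path):
    """Load an export (assumed: one JSON array of documents) and check its ids."""
    with open(path, encoding="utf-8") as handle:
        documents = json.load(handle)
    return check_ids(documents)


if __name__ == "__main__":
    # Small inline sample instead of a real export file.
    sample = [{"id": "a"}, {"id": "a"}, {"name": "no id here"}]
    missing, duplicates = check_ids(sample)
    print("documents without id:", missing)
    print("duplicate ids:", duplicates)
```

Both lists should be empty before the import is started with ID generation disabled.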

Photo by Ian Battaglia on Unsplash